AI has hacked the operating system of human civilization

In the ongoing discussion about the social impact of artificial intelligence (AI), one encounters both critics and proponents of AI. Among the supporters, broadly speaking, there are two primary perspectives that were distinguished decades ago by philosopher John R. Searle in his famous article Minds, brains, and programs. These perspectives are divided into the concepts of “strong” AI, which suggests AI can possess genuine understanding and consciousness, and “weak” AI, indicating AI’s capabilities are limited to simulating human cognition:

“According to weak AI, the principal value of the computer in the study of the mind is that it gives us a very powerful tool…But according to strong AI, the computer is not merely a tool in the study of the mind; rather, the appropriately programmed computer really is a mind, in the sense that computers given the right programs can be literally said to understand and have other cognitive states.” (p. 417)

Considering this topic in depth would require significant time and effort, so here I aim to explore one particularly intriguing group: those who hold critical, I would say apocalyptic, views on artificial intelligence (AI). There is a paradox worth acknowledging here: some of these critics, despite their negative views, ground their analysis in a “strong” understanding of AI.

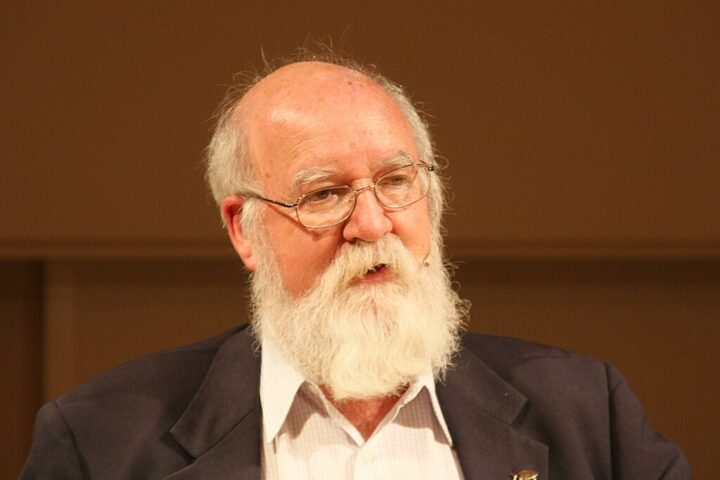

Therefore, I will dwell briefly on the ideas of the writer and professor Yuval Noah Harari. His reflections on AI are both enlightening and challenging, making him one of the representatives of the widespread skepticism surrounding AI and its consequences for humanity. His best work on this subject is a concise article for The Economist, titled AI has hacked the operating system of human civilization. This piece stands out as an exceptional example of comprehending the rage against machines. Moreover, it’s a concise, direct, clear, and well-structured text.

Rage against the machines

In that article, Harari posits that recent advances in AI, especially in Natural Language Processing (NLP) technologies such as ChatGPT, pose unprecedented threats to human civilization. He argues that AI’s ability to manipulate and generate human-like language could undermine foundational cultural artifacts (laws, institutions, and artistic expressions) that are deeply intertwined with language.

According to Harari,

“…over the past couple of years new AI tools have emerged that threaten the survival of human civilization from an unexpected direction. AI has gained some remarkable abilities to manipulate and generate language, whether with words, sounds, or images. AI has thereby hacked the operating system of our civilization.”

In other words, AI’s language proficiency challenges our creative and legislative domains and threatens to erode the fabric of human interaction and democracy by fostering fake intimacy and manipulating public discourse.

“What would happen once a non-human intelligence becomes better than the average human at telling stories, composing melodies, drawing images, and writing laws and scriptures? When people think about ChatGPT and other new AI tools, they are often drawn to examples like schoolchildren using AI to write their essays. What will happen to the school system when kids do that? But this kind of question misses the big picture. Forget about school essays. Think of the next American presidential race in 2024, and try to imagine the impact of AI tools that can be made to mass-produce political content, fake news stories, and scriptures for new cults.”

And later, he adds,

“Democracy is a conversation, and conversations rely on language. When AI hacks language, it could destroy our ability to have meaningful conversations, thereby destroying democracy.”

Naturally, the problem here lies not in the identification of the issues but in the consequences to be drawn from them. Moreover, his position is not new. Since the beginning of AI as a field of study, it has gathered detractors and supporters. In other words, discoveries and tools such as ChatGPT have only increased the attention we devote to it. In Harari’s specific case, this perspective could be criticized for anthropomorphizing AI, attributing to it a conscience and a moral responsibility that it does not possess. “For millennia human beings have lived inside the dreams of other humans. In the coming decades, we might find ourselves living inside the dreams of an alien intelligence,” he says.

Harari’s diagnosis appears to conflate the tool (AI) with its users (humans), leading to a skewed perception of the threat landscape. My point here is that, regardless of the problems he identifies, most of which are really important, we would be making a mistake if we treated AI as a subject and forgot human responsibility and the system in which AI emerges and develops. At its core, ChatGPT, the most famous of these systems, is a mirror that projects our own linguistic and cognitive patterns, not an autonomous entity capable of intentional “hacking” or manipulation. At least not now. [1]

The well-known models to which Harari refers have not only been written and developed by humans; their operation is also intimately linked to the instructions we give them and what we want from them. At a fundamental level, ChatGPT, as a language model, works closely with its users, who must enter a prompt to obtain an answer. It is in this dialectical process that ChatGPT can produce answers in the form of poems, songs, reports, news, abstracts, and other kinds of text.

ChatGPT, Prompting, and Large Language Models (LLMs)

A prompt is a mode of interaction between a human and a large language model that allows the latter to generate the expected output. This interaction can take the form of a query, code snippets, or examples. In other words, the prompt is the text we use to “communicate” with the machine or, more accurately, to produce the desired result.

Prompting, also known as Prompt Engineering, is a method for crafting inputs that direct artificial intelligence (AI) models, especially those in natural language processing and image generation, towards generating specific outcomes. It’s about converting your needs into a format the AI can understand and use to produce the results you’re after.

While describing what you want in natural language might seem simple, the reality is more complex. Different models have different capabilities, and the specificity of your instructions can significantly influence the outcome. Tasks may require detailed and precise prompts, making the process one of trial, error, and refinement to achieve the best results.

Why are we talking about prompting? My focus on prompts is no coincidence. Indeed, the capacity to emulate human communication has been a critical factor in assessing specific machines’ capabilities; the prompt is precisely the linguistic expression that allows us to study that process. Let’s take, for example, a random prompt:

Prompt:

The sky is

Output:

blue

As is evident, the system’s response is feeble. However, we can try something else instead and give context or more information to our prompt:

Prompt:

Complete the sentence:

The sky is

Output:

blue during the day and dark at night. [2]

Thus, one key factor in our interaction with the AI models Harari refers to lies in the prompt, which consists of several components. It may include specific instructions or tasks for the model, contextual information to guide the AI to more precise answers, the input data or questions for which we seek answers, and the output indicator or the desired output type.
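The components of a prompt named above (instruction, context, input data, and output indicator) can be pictured as fields that are assembled into a single piece of text. The following is only a minimal sketch under stated assumptions: the `Prompt` class, its field names, and the `render` method are hypothetical names chosen for illustration, and no real model is called. It simply reproduces the two “The sky is” prompts from the example.

```python
from dataclasses import dataclass


@dataclass
class Prompt:
    """Hypothetical container for the four prompt components (illustration only)."""
    instruction: str = ""       # the task we want the model to perform
    context: str = ""           # background information that narrows the answer
    input_data: str = ""        # the text or question we want a response to
    output_indicator: str = ""  # a hint about the desired form of the output

    def render(self) -> str:
        # Join the non-empty components, one per line, into the final prompt text.
        parts = [self.context, self.instruction, self.input_data, self.output_indicator]
        return "\n".join(part for part in parts if part)


# The bare prompt: only input data, no instruction.
bare = Prompt(input_data="The sky is")

# The improved prompt: the same input plus an explicit instruction.
guided = Prompt(instruction="Complete the sentence:", input_data="The sky is")

print(bare.render())
print("---")
print(guided.render())
```

The only difference between the two prompts is the instruction field, which is the point of the example: the human, not the model, decides what the task is.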

Knowing all this, the claim that ChatGPT has already hacked human civilization seems exaggerated. While it’s worth pondering the future possibility of general artificial intelligence, current systems, including ChatGPT, rely heavily on human-generated data and the effectiveness of our prompts. This reliance highlights the significant gap between AI capabilities and the complexity of the human brain and our knowledge base.

Views like Yuval Noah Harari’s, which suggest AI poses an imminent existential threat, often miss two critical points. Firstly, they overestimate AI’s current abilities, implying a level of independence and understanding that doesn’t exist. Secondly, such critiques underestimate human agency, our capacity for critical thought, and ethical decision-making.

Harari’s focus on AI as a source of societal disruption may overlook the real issues, namely, the human decisions that shape AI’s development, deployment, and regulation. The challenge isn’t AI itself but the conditions that allow AI to seem dominant. We must address the prevailing logic that entraps us in a world where human connections are diminished, not only in our relationship to technology but also within our societal and environmental contexts.

Towards a constructive engagement with AI

The dialogue around AI should not be mired in fear-mongering or deterministic projections; it should instead embrace the philosophical rigor that questions, critiques, and ultimately seeks to understand the complex interplay between human and machine intelligence.

To engage constructively with AI, we must shift the discourse from a simplistic dichotomy of AI as either savior or destructor to a more complex examination of how it amplifies, extends, or challenges human values and societal norms. The real conversation should be about the frameworks we establish for AI’s development and use, ensuring that these technologies serve humanity’s best interests while mitigating potential harm.

In this light, Harari’s narrative, though attractive, might benefit from a deeper engagement with the philosophical traditions that have long grappled with the nature of language, human agency, and dialogue.

[1] The projection offered by Ajeya Cotra, associated with the nonprofit organization Open Philanthropy, is derived not from poll results but from an analysis of enduring patterns in the computational power utilized for AI training. Cotra posits that by the year 2050, there is a fifty percent likelihood of developing a human-level transformative AI system that is both achievable and economically viable, a prediction she regards as her “median scenario.” Furthermore, Cotra’s “plausible” forecasts, extending between 2040 and 2090, underscore her view that there is significant uncertainty surrounding these predictions.

[2] Example using ChatGPT 3.5 from Prompt engineering guide, at https://www.promptingguide.ai

I have a question… From where does AI gather its data to write an essay? Is it social media? Is this essay of Dialektika considered social media? Does it read books on subjects? I’m assuming the book would be a downloaded version. There does seem to be a lot of fear around the subject, but that’s at least another essay if not a book. Thanks

It pulls from the internet, which is guarded by social media. So its opinions reflect man’s OPINIONS, not necessarily truth. Like us, it can only know the information it is fed, thus is flawed and potentially dangerous if altogether believed and accepted.

“The real conversation should be about the frameworks we establish for AI’s development and use, ensuring that these technologies serve humanity’s best interests while mitigating potential harm.” Please expand on who “we” is in this sentence. There’s the problem. “We” does not include many humans, only a few, mostly wealthy, white males.

You’re right, Carol. In fact, just today, I came across something interesting related to this. According to Forbes, Sam Altman, CEO of OpenAI, has become a billionaire. And OpenAI? It has a valuation of $80 billion, with Microsoft contributing at least $13 billion, as reported by CNBC. It seems to me that this is the perfect starting point to understand who’s behind the ‘WE.’

This article, like too many others on the subject, presupposes that the users of AI are and will be rational and ethical agents living within the confines of the rule of law and “civilized” value systems. In this perspective, it’s meaningful to ask questions such as, “How can we ensure that AI serves the most constructive interests of humanity?” But one of the most significant features of AI is that it is, in principle, available to everyone on the planet. That’s a diverse group! It includes criminals and terrorists, the seriously disturbed, the ignorant, the disaffected, the mischievous, the heedless, and dedicated pranksters. Even more alarming is the availability of this technology to adversarial regimes. The sinister reality is that there can be no safeguards against pernicious applications of AI, no protective regulatory system, no restraining cultural norms. We are all sitting ducks, as well as potent(ial) malefactors. Voices that predict global catastrophe can hardly be brushed off as alarmist.